Overview:

In this tutorial, I would like to demo the Timeout Pattern, one of the Microservice Design Patterns for building highly resilient microservices, using a Linkerd Service Profile on a Kubernetes cluster.

Need For Resiliency:

Microservices are distributed in nature. When you work with distributed systems, always remember the number one rule: anything can happen. We might be dealing with network issues, service unavailability, application slowness and so on. A problem in one system can affect the behavior or performance of another, and such unexpected failures are difficult to diagnose and fix.

The ability of a system to recover from such failures and remain functional is what makes it resilient. It also prevents failures from cascading to the services that depend on it.

Timeout Pattern:

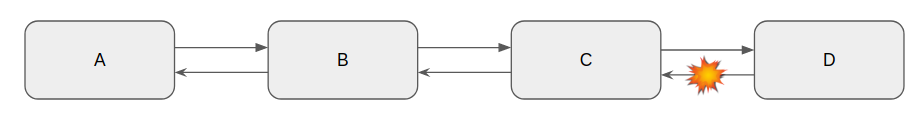

We all experience intermittent application slowness once in a while for no obvious reason, even with applications like google.com. In a microservice architecture with multiple services (A, B, C & D), one service (A) might depend on another service (B), which in turn might depend on C, and so on. Sometimes, due to a network issue, Service D might not respond as expected. This slowness propagates back up the call chain, all the way to Service A, and blocks threads in each of the calling services.

As this is not an uncommon issue, it is better to take service slowness and unavailability into consideration while designing your microservices by setting a timeout for every network call. That way the core services keep working and stay responsive even when the services they depend on are NOT available.
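To make the idea concrete, here is a minimal Java sketch of a call that is bounded by a timeout and falls back to a default value. It is only an illustration: `TimeoutSketch`, `callWithTimeout` and `slowCall` are hypothetical names that do not come from the demo project, and `slowCall` merely sleeps instead of making a real network call.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

final class TimeoutSketch {

    // Runs the call on another thread and waits at most `timeout` for its result.
    // If the call has not finished by then, the fallback value is returned instead.
    static <T> T callWithTimeout(Supplier<T> call, Duration timeout, T fallback) {
        return CompletableFuture.supplyAsync(call)
                .completeOnTimeout(fallback, timeout.toMillis(), TimeUnit.MILLISECONDS)
                .join();
    }

    // Hypothetical stand-in for a slow remote dependency (no real network call).
    static String slowCall(long delayMillis) {
        try {
            Thread.sleep(delayMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "live response";
    }

    public static void main(String[] args) {
        // Fast dependency: the real result arrives well within the limit.
        System.out.println(callWithTimeout(() -> slowCall(10), Duration.ofMillis(500), "fallback"));
        // Slow dependency: the caller gives up after 200 ms and uses the fallback.
        System.out.println(callWithTimeout(() -> slowCall(2000), Duration.ofMillis(200), "fallback"));
    }
}
```

Note that the caller is released once the timeout expires, but the slow task itself keeps running on its worker thread until it finishes. The timeout protects the caller; it does not make the dependency any faster.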

Sample Application:

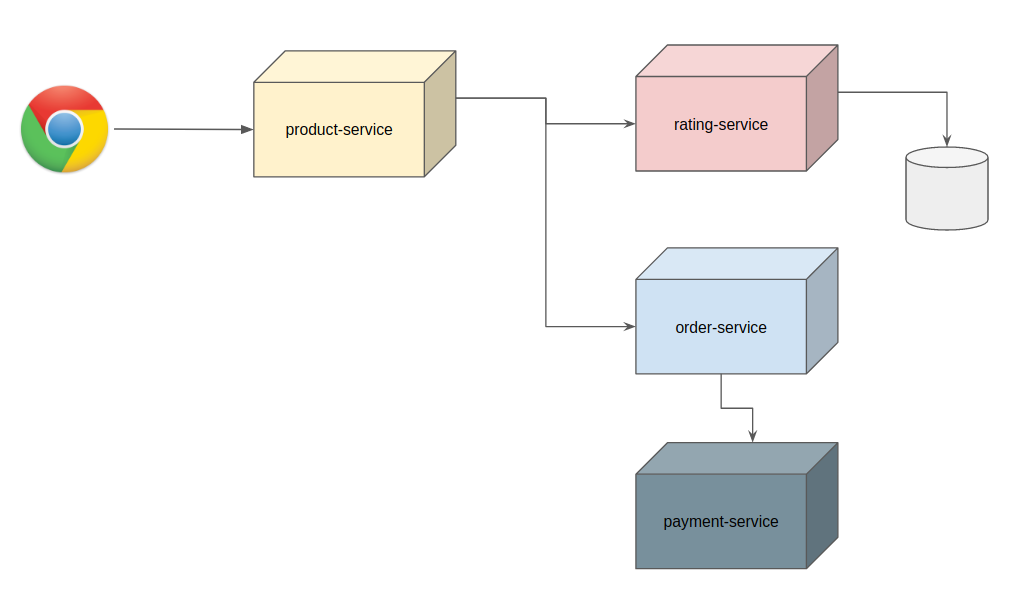

Let's consider this simple application to explain the timeout pattern with a Linkerd Service Profile.

- We have multiple microservices as shown above.

- Product service acts as the product catalog and is responsible for providing product information.

- Product service depends on the rating service.

- Rating service maintains product reviews and ratings. It is notorious for being slow due to the huge amount of data it holds.

- Whenever we look at the product details, product service sends a request to the rating service to get the reviews for the product.

- We have other services like account-service, order-service and payment-service, which are not relevant to this article.

- Product service is a core service without which the user cannot start the order workflow.

Project Set Up:

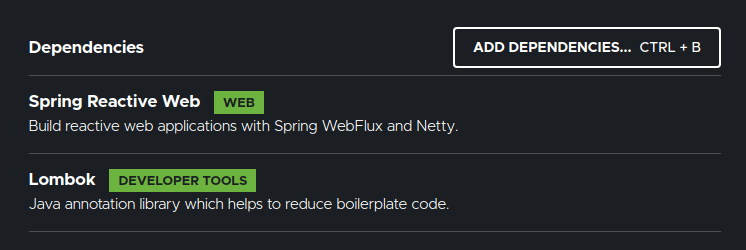

Let's first create a Spring Boot project with these dependencies.

I created a multi-module Maven project as shown here.

If the user tries to view a product, say product id 1, the product-service is expected to respond like this, fetching the ratings as well.

```json
{
  "productId": 1,
  "description": "Blood On The Dance Floor",
  "price": 12.45,
  "productRating": {
    "avgRating": 4.5,
    "reviews": [
      {
        "userFirstname": "vins",
        "userLastname": "guru",
        "productId": 1,
        "rating": 5,
        "comment": "excellent"
      },
      {
        "userFirstname": "marshall",
        "userLastname": "mathers",
        "productId": 1,
        "rating": 4,
        "comment": "decent"
      }
    ]
  }
}
```

You can take a look at the complete source code of this demo on GitHub, which is shared at the end of this article.

The important thing to note here is that we will have 2 products for this demo. Product id 1 loads quickly. Product id 2 is delayed by 5 seconds for roughly 60% of the requests, as the controller below shows. This slowness affects the upstream services.

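```java
import java.util.concurrent.ThreadLocalRandom;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("ratings")
public class RatingController {

    @Autowired
    private RatingService ratingService;

    @GetMapping("{prodId}")
    public ProductRatingDto getRating(@PathVariable int prodId) throws InterruptedException {
        // Simulate a slow dependency: for product 2, about 6 out of 10 requests sleep for 5 seconds.
        if (prodId == 2 && ThreadLocalRandom.current().nextInt(0, 10) > 3) {
            Thread.sleep(5000);
        }
        return this.ratingService.getRatingForProduct(prodId);
    }
}
```

Application Demo: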

I have dockerized the applications and pushed them to Docker Hub.

```yaml
version: '3'
services:
  product-service:
    build: ./product-service
    image: vinsdocker/timeout-demo-product-service
    ports:
    - 8080:8080
    environment:
    - RATING_SERVICE_ENDPOINT=http://rating-service:7070/ratings/
  rating-service:
    build: ./rating-service
    image: vinsdocker/timeout-demo-rating-service
```

Save the above content in a YAML file (for example docker-compose.yml, which docker-compose picks up by default). Run this command to bring the applications up.

```
docker-compose up
```

Send the below requests.

```
http://localhost:8080/product/1

# This will be slow about 60% of the time
http://localhost:8080/product/2
```

The rating-service slowness affects product-service performance.
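The share of slow requests follows directly from the condition in the rating controller: `nextInt(0, 10)` yields the values 0 to 9 with equal probability, and the request sleeps whenever the value is greater than 3. The small sketch below just does that arithmetic; `SlowShareSketch` and its methods are hypothetical names used for illustration only.

```java
final class SlowShareSketch {

    // Counts how many of the equally likely values 0..9 satisfy the controller's condition `value > 3`.
    static int slowOutcomes() {
        int count = 0;
        for (int value = 0; value < 10; value++) {
            if (value > 3) {
                count++;
            }
        }
        return count;
    }

    // Average delay added per request for product 2, given a 5 second sleep on every slow request.
    static double averageAddedDelaySeconds() {
        return slowOutcomes() / 10.0 * 5.0;
    }

    public static void main(String[] args) {
        System.out.println("slow outcomes out of 10: " + slowOutcomes());
        System.out.println("average added delay in seconds: " + averageAddedDelaySeconds());
    }
}
```

So 6 out of 10 requests for product 2 are slow, which adds 3 seconds to the average response time of product-service for that product.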

Kubernetes Deployment:

This is the deployment manifest for Kubernetes for the above application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rating-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rating-app
  template:
    metadata:
      labels:
        app: rating-app
    spec:
      containers:
      - name: rating-app
        image: vinsdocker/timeout-demo-rating-service
---
apiVersion: v1
kind: Service
metadata:
  name: rating-service
spec:
  selector:
    app: rating-app
  ports:
  - port: 7070
    protocol: TCP
    targetPort: 7070
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: product-app
  template:
    metadata:
      labels:
        app: product-app
    spec:
      containers:
      - name: product-app
        image: vinsdocker/timeout-demo-product-service
        env:
        - name: RATING_SERVICE_ENDPOINT
          value: http://rating-service:7070/ratings/
---
apiVersion: v1
kind: Service
metadata:
  name: product-service
spec:
  selector:
    app: product-app
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
```

- nginx Ingress:

```yaml
# Note: the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22.
# Newer clusters need networking.k8s.io/v1 with its updated backend schema.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/configuration-snippet: |
      proxy_set_header l5d-dst-override $service_name.$namespace.svc.cluster.local:$service_port;
      grpc_set_header l5d-dst-override $service_name.$namespace.svc.cluster.local:$service_port;
spec:
  rules:
  - host: vins.example.com
    http:
      paths:
      - backend:
          serviceName: product-service
          servicePort: 8080
```

Once we deploy this on a Kubernetes cluster, we can send the requests below to see the same behavior. (Here I have updated the /etc/hosts file with the IP and host mapping.)

```
http://vins.example.com/product/1

# This will still be slow about 60% of the time
http://vins.example.com/product/2
```

At this point, we have not fixed the issue; it is still present. The only way to fix it seems to be changing the code to add a timeout. But a code change requires testing and has to go through all the dev / qa / stg cycles.
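For comparison, this is roughly what such a code-level fix could look like with the JDK's built-in `java.net.http.HttpClient` (Java 11+). It is a sketch under assumptions, not the product-service code from this demo: `RatingClientSketch`, `fetchRating`, `startStubServer`, `urlFor` and the empty-rating JSON are names I made up, and the stub server only imitates a slow rating-service on a random local port.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

final class RatingClientSketch {

    // Hypothetical fallback body used when the rating call fails or times out.
    static final String EMPTY_RATING = "{\"avgRating\":0.0,\"reviews\":[]}";

    // Calls the rating endpoint and gives up after `timeout`.
    // A real service would reuse one HttpClient instead of building it per call.
    static String fetchRating(String url, Duration timeout) {
        HttpClient client = HttpClient.newBuilder().connectTimeout(timeout).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).timeout(timeout).GET().build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200 ? response.body() : EMPTY_RATING;
        } catch (IOException e) {
            // Includes HttpTimeoutException, which is thrown when the timeout expires.
            return EMPTY_RATING;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return EMPTY_RATING;
        }
    }

    // Starts a local stub that answers /ratings/* after the given delay (stand-in for rating-service).
    static HttpServer startStubServer(long delayMillis) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/ratings/", exchange -> {
                try {
                    Thread.sleep(delayMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                byte[] body = "{\"avgRating\":4.5,\"reviews\":[]}".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static String urlFor(HttpServer server, int prodId) {
        return "http://127.0.0.1:" + server.getAddress().getPort() + "/ratings/" + prodId;
    }

    public static void main(String[] args) {
        HttpServer slow = startStubServer(1000);
        // The stub needs 1 second, the client waits only 200 ms, so the fallback is printed.
        System.out.println(fetchRating(urlFor(slow, 2), Duration.ofMillis(200)));
        slow.stop(0);
    }
}
```

Even in this small form, the timeout value and the fallback are baked into the application, so tuning them means rebuilding and redeploying the service.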

Let's see how a Linkerd Service Profile can help here.

Linkerd:

Linkerd is a lightweight service mesh for k8s. Installing Linkerd takes only about 5 minutes. Detailed steps are here.

Once you have Linkerd set up in your cluster, run this command to redeploy our app.

```
linkerd inject deployment.yaml | kubectl apply -f -
```

Linkerd Service Profile:

A Linkerd Service Profile is a CRD (Custom Resource Definition, a K8s extension). It provides additional information about a service and allows us to configure timeouts, retry logic etc. without touching the code.

We create a service profile for the rating service, as shown here, so that any rating request must respond within 1 second. A request that takes longer is timed out automatically, so product-service will not wait forever.

```yaml
apiVersion: linkerd.io/v1alpha2
kind: ServiceProfile
metadata:
  name: rating-service.default.svc.cluster.local
  namespace: default
spec:
  routes:
  - condition:
      method: GET
      pathRegex: /ratings/[^/]*
    name: GET /ratings/{id}
    timeout: 1s
```

Apply this on your k8s cluster. Send the same requests to the product service as shown here.

```
http://vins.example.com/product/1

# This will NOT be slow. max wait time 1s
http://vins.example.com/product/2
```

After the above service profile is set up, if any request for product id 2 takes more than 1 second, Linkerd will time out the request and we get the response below.

```json
{
  "productId": 2,
  "description": "The Eminem Show",
  "price": 12.12,
  "productRating": {
    "avgRating": 0.0,
    "reviews": []
  }
}
```

Here the rating and reviews are empty. But that is OK as they are not critical. If the product itself were not available, we would have a very bad user experience, and it could impact revenue.
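The empty rating implies that product-service treats a failed rating call as "no rating" instead of failing the whole request. As far as I know, the Linkerd proxy answers a timed-out request with a 504 Gateway Timeout, so the caller only has to handle a non-2xx status. The sketch below shows that degradation in plain Java; `ProductFallbackSketch`, `ratingOrEmpty` and `productJson` are hypothetical names, and the real product-service may implement this differently.

```java
import java.util.Locale;

final class ProductFallbackSketch {

    // Rating used whenever the rating-service call did not succeed.
    static final String EMPTY_RATING = "{\"avgRating\":0.0,\"reviews\":[]}";

    // Keeps the rating body only for a 2xx status with a non-blank body;
    // anything else (for example a 504 from the proxy) degrades to the empty rating.
    static String ratingOrEmpty(int statusCode, String ratingJson) {
        boolean success = statusCode >= 200 && statusCode < 300;
        return success && ratingJson != null && !ratingJson.isBlank() ? ratingJson : EMPTY_RATING;
    }

    // Builds the product response. The description is not JSON-escaped; this is fine for a sketch only.
    static String productJson(int productId, String description, double price,
                              int ratingStatus, String ratingJson) {
        return String.format(Locale.ROOT,
                "{\"productId\":%d,\"description\":\"%s\",\"price\":%.2f,\"productRating\":%s}",
                productId, description, price, ratingOrEmpty(ratingStatus, ratingJson));
    }

    public static void main(String[] args) {
        // The rating call timed out (assumed status 504), so the product is served with an empty rating.
        System.out.println(productJson(2, "The Eminem Show", 12.12, 504, ""));
    }
}
```

The core product data is always returned; only the optional rating part depends on the outcome of the downstream call.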

Pros:

- In this approach, we do not block any threads indefinitely in the product service.

- Any unexpected event during the network call gets timed out within 1 second.

- We achieved this without any code change in the actual app, by moving the timeout logic to the sidecar.

- Core services are not affected by the poor performance of the services they depend on.

Summary:

The Timeout Pattern is one of the simplest Microservice Design Patterns for designing resilient microservices. Introducing a timeout solves network-related issues only partially.

Read more about other Resilient Microservice Design Patterns.

- Resilient Microservice Design Patterns – Retry Pattern

- Resilient Microservice Design Patterns – Circuit Breaker Pattern

- Resilient Microservice Design Patterns – Bulkhead Pattern

- Resilient Microservice Design Patterns – Rate Limiter Pattern

The source code is available here.

Happy learning 🙂